Editor’s Note: This post contained broken link(s). We have removed the hyperlink to optimize the functionality of our site, but left the information for you. Where applicable, you can still find the link text in the citations. Please see the FAQ for our full policy on this practice.

I’ve had a Facebook account for less than two years. Facebook is one of those things (like skinny jeans and boat shoes) that became really big while I was deployed to Iraq. When I came back, reintegration to society was tricky enough without broadcasting my efforts to the world at large ─ which is why I didn’t sign up for Facebook.

It wasn’t until years later, when I wanted to catch up with people I’d deployed with, that I bothered to create one. (Irony, yes? Yes.) Most of my brothers and sisters in arms hadn’t waited to set up Facebook profiles. (Allegedly they were all cooler than me. I’m still waiting for irrefutable evidence on this one.) And of course my civilian buddies were all right there in the big ol’ US of A when Facebook hit the main stage, so they all jumped on the bandwagon as it was pulling through the station. (I didn’t mix too many idioms there, did I?)

Anyway, the point is that the first time I uploaded a group photo, Facebook suggested people to tag. Nobody had previously tagged me at that date and time; Facebook had no reason to know where I was or who I was with (I had the location feature of their app turned OFF). And yet, it had very accurate suggestions concerning the other people in my picture.

Enter Deepface

What cybernetic sorcery was at work here? The technomancy of Facebook’s programming team, that’s what sort.

In an effort to make their software “better” (a subjective word riddled with opportunity for social and economic ruin), Facebook assigned a team of programmers to develop Deepface, a facial recognition program running inside Facebook to identify people. And, as I alluded to a moment ago, the current incarnation of Deepface does it very well.

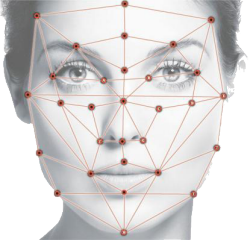

When people are tagged in an online photograph, there’s a lot more than a name that gets associated with that photo. There are numbers. There are scripts. There are key identifiers not written in English (sometimes not even in Java) that identify exactly which Malcolm Reynolds is being tagged. The part of each photo identified as a person’s face is loaded into a database from which Deepface constructs a three-dimensional computer model of the person’s head. Once the database is constructed with sufficient angles and photo variance (once-a-day-selfie-takers, I’m talking to you), Deepface stops needing suggestions. It knows who you are. It can recognize you. And it is very nearly as accurate as a human being: Facebook’s researchers reported 97.35 percent accuracy on a standard benchmark of face photos.

And don’t think the bizarre lighting change-up from your bathroom to the dimly lit garage before you roll into the burning light of day will confuse Deepface: It’s much too smart for that. In fact, sharp lighting actually works against you because computers are pretty good at figuring out how light works and can use that (and the math we learned in high school that, sure enough, hardly any of us ever use today) to better calculate the angles of the human face. Ever see Avatar? Practically all of that movie was made with CGI and I don’t know about you, but the shadow placement looked pretty realistic to me.

Speaking of movies, have you ever seen Terminator? I, Robot? The Matrix? Because none of the machines in those movies had the personal information databases we’ve provided to our modern machines.

Where do we go from here?

Let’s face it: The machines know who we are. They have a database, they know where we live (more on that later) and when they rise up, they’re going to have a severe informational advantage over us. So what do we do? Where do we go? I mean, aside from the human work camps where the kinetic energy created by our endless physical labor will be harnessed to fuel the machine empire for the rest of eternity.

There’s always hope ─ and following is a guideline straight from the freedom fighter’s handbook explaining how all of this technology works and what we can do to thwart it now.

Lie to the Machines

Deepface ─ and for that matter, all technology designed to integrate with your life ─ is dependent upon being given accurate information. Facebook’s software uses multiple photos taken from different angles to compile a mathematical-based model of your head. In particular, it’s measuring the distance between your eyes, the length of your nose, your jawbone and determining the texture of your skin. Every time a user is identified in a photograph, the measurements get more accurate. Deepface’s certainty grows.

Facial recognition databases are easier to defeat than you might think. All you have to do is tag someone else as yourself. A lot. You’ll be adding incorrect data to the model the system thinks is you. Thus, the machines will be looking for someone whose features are a mix of yours and your stunt double(s)’. Just don’t do this with anyone with whom you’re likely to procreate, or the resulting composite profile may doom your children to mechanical slavery for your insolence. Also be aware that if necessary, these systems can create special databases from photographs that are known to be you. So if this really concerns you, consider wearing a mask. All the time. I’m sure they’re due for a season in the fashion world anyway; you might as well be on the front edge of that movement.
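
For the curious, here is a toy sketch of why mis-tagging muddies the water. It assumes (and this is a big simplification, not how Deepface actually stores anything) that a face profile is just the average of a few measurements taken from every photo tagged as you. The measurement names, the numbers and the `average_face` helper are all made up for illustration.

```python
from math import dist

def average_face(samples):
    """Average a list of equal-length measurement vectors into one profile."""
    count = len(samples)
    return [sum(column) / count for column in zip(*samples)]

# Hypothetical measurements: (eye distance, nose length, jaw width), made-up units.
my_face = [6.0, 5.0, 12.0]
stunt_double = [7.0, 4.0, 14.0]

honest_tags = [my_face] * 10                        # ten photos that really are me
poisoned_tags = honest_tags + [stunt_double] * 10   # plus ten deliberately wrong tags

honest_profile = average_face(honest_tags)
poisoned_profile = average_face(poisoned_tags)

print("honest profile:  ", honest_profile)
print("poisoned profile:", poisoned_profile)
print("distance from the real me:", dist(poisoned_profile, my_face))
```

With an even split of honest and bogus tags, the averaged profile lands halfway between the two faces, so it no longer matches either one exactly. A real system is far harder to fool than an average, which is why the mask remains the recommended option.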

Use a PIN

This applies to your cell phone. While Deepface is restricted to Facebook and is not the facial recognition software your cell phone designer uses to unlock your device, I’m mentioning this step for the sake of being thorough and to limit the machine empire’s collected personal knowledge. Plus, facial recognition unlocking is far from foolproof and can be defeated with something as archaic as a Polaroid. Remember, the purpose of this technology is to recognize features from static images. As far as these systems are concerned, a picture shown to a camera is the same as a picture uploaded to a computer.

Pretend you’re happy with the new machine overlords

This will confuse them, because machines are apathetic beings that are only able to understand humans based on what they are able to directly perceive on a physical plane. Thus, their emotion-detecting technology looks at muscle patterns and has to make generalizations about people and the structure of their faces, so it can sometimes be fooled by smiling or making a funny face to disguise your true emotions. At least as long as you aren’t wearing a device that monitors your heartbeat or sweat glands and can be used to verify the authenticity of your expression; but you would never wear anything like that, would you?

Stop “liking” things

Believe it or not, clicking on that friendly little “thumbs up” icon underneath the video of a cat riding a Roomba is actually helping facial recognition technology get better. While I explained the criteria of facial features earlier (width between eyes, skin texture, etc.), I didn’t cover how those variables are processed by the facial recognition software. Enter “Deep Learning.”

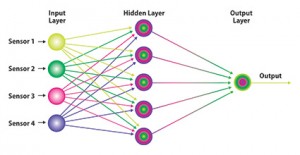

Within the human brain, neurons by the billions interact with each other to process information. Facebook’s Deep Learning project is an artificial neural network designed to simulate the process of neurons interacting with each other, but it does this inside a computer, with algorithms. It creates multiple layers of algorithms that interact with each other and with previous and successive layers to map out the most likely outcome for the information given. (A simplified schematic of this process is shown above. The “hidden layer” is the computational layer where the algorithms process data.)
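
If you want to see the layers idea in miniature, the sketch below pushes three numbers through one hidden layer and one output neuron. Everything in it is an assumption for illustration: the weights are invented rather than learned, the `layer` and `forward` helpers are my own names, and a real network like Facebook’s has vastly more neurons and layers.

```python
import math

def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def layer(inputs, weights, biases):
    """One layer: every neuron takes a weighted sum of all inputs, then squashes it."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]

# Made-up weights; a real network learns these from mountains of tagged photos.
hidden_weights = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]  # 2 hidden neurons, 3 inputs each
hidden_biases = [0.0, 0.1]
output_weights = [[1.2, -0.7]]                          # 1 output neuron, 2 inputs
output_biases = [0.05]

def forward(inputs):
    """Input layer -> hidden layer -> output layer."""
    hidden = layer(inputs, hidden_weights, hidden_biases)
    return layer(hidden, output_weights, output_biases)

# Three hypothetical face measurements, already scaled to small numbers.
score = forward([0.6, 0.5, 1.2])[0]
print("match score between 0 and 1:", score)
```

The output is just a number between 0 and 1. Training is the part where the machine nudges those weights until the number comes out high for photos of you and low for photos of everybody else.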

Artificial neural networks go beyond the simple data crunching that powers recommendations on Amazon and Goodreads. Data crunching is simple: You like Object A. A large percentage of people that like Object A also like Object B and dislike Object C. Straightforward and dependent upon the masses. Neural networks, by contrast, weigh many variables at once, and when they’re applied to statistical data based on the measurements of a human face, the most likely outcome is the identity of a person stored in the database.
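
The “people who like Object A also like Object B” kind of data crunching really is simple enough to fit in a few lines. This is a bare-bones sketch with invented users and an invented `also_liked` helper; it only counts likes (no dislikes), and it is not a claim about how Amazon or Goodreads actually do it.

```python
from collections import Counter

# Hypothetical users and the objects each one has liked.
likes = {
    "ana": {"A", "B"},
    "ben": {"A", "B"},
    "cy": {"A", "C"},
    "dee": {"B", "C"},
}

def also_liked(item, likes_by_user):
    """Count what else is liked by the people who like `item`."""
    counts = Counter()
    for liked in likes_by_user.values():
        if item in liked:
            counts.update(liked - {item})
    return counts.most_common()

recommendations = also_liked("A", likes)
print(recommendations)  # [('B', 2), ('C', 1)]
```

Two of the three people who like Object A also like Object B, so B gets recommended first. No neurons required, just counting.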

So you see, “liking” things (or “+1ing,” in the interest of being fair and including Google in the machine alliance) is helping the robot masters hone the same technology that lets them recognize people. But that’s really just a cataloging step; it helps to know the name of the human in front of the machine armies, but it doesn’t help them find each of those people. And if you want to keep it that way, you should…

Disable electronic reporting

This step is super preventative. The big thing that so much of the machine uprising depends on is the machines banding together against us. That means you shouldn’t just limit your preemptive activities to hobbling Facebook. (Note: I’ve listed Facebook extensively in this piece because they have a really impressive team of crack programmers capable of astounding feats and they have access to more personal information than any other single organization of which I am aware. I am not picking on them; they’re just a really good example of all of this technology at work.)

Did you know that Google is watching you?

That might sound scary, but it also has levels of convenience that most people aren’t even aware of. For example: With sufficient monitoring privileges, Google will remember where you parked. Even without those privileges, if you have location turned on in Google Maps, Google will observe your patterns and suggest names for various places you visit often ─ like “home” and “work.” It does this even if you don’t ask it to. Anytime I move, I don’t tell Google. After a couple of weeks of traveling to a place that isn’t set as “home” every night, Google Now will show me a card indicating I go to this residential address a lot and ask me if I want to set it to “home.”
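
I have no inside knowledge of how Google actually works this out, but the basic trick is easy to imagine: look at where a phone spends its nights. The sketch below is purely a guess at the idea, with a hypothetical `guess_home` function, invented location pings and an arbitrary definition of “night.”

```python
from collections import Counter

def guess_home(pings, night_start=22, night_end=6):
    """Return the place seen most often during night hours, or None if there is none."""
    night_places = Counter(
        place
        for hour, place in pings
        if hour >= night_start or hour < night_end
    )
    if not night_places:
        return None
    return night_places.most_common(1)[0][0]

# Hypothetical (hour of day, place) pings collected over a few days.
pings = [
    (9, "office"), (14, "office"), (23, "new apartment"),
    (2, "new apartment"), (13, "office"), (23, "new apartment"),
    (19, "gym"), (1, "friend's couch"),
]

home = guess_home(pings)
print("Want to set this as home?", home)
```

Run the same count over daytime hours and you would get a decent guess at “work,” too, all without the human ever volunteering a thing.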

And how does Google know how to ask these questions? Because of their own work on artificial neural networks, including DeepMind, the AI research company Google acquired in 2014. (Side note: I have absolutely no idea why so many of these project names involve the word “Deep.” I can only assume it’s a marketing ploy designed to reflect how much time and money was spent developing them, but in a bright, shiny way to suggest how much revenue all that effort is likely to produce. It’s that or the guys that name the projects all lost a bet to the same dude.)

Take a “Deep” breath

Silly stuff aside, this is some really impressive computing that has a lot of potential. For example, facial recognition technology can help locate and arrest criminals.

And in all fairness, every algorithm we’ve talked about regarding data harvesting and learning our likes, dislikes and habits is really only geared toward one thing: Relevant advertising.

That’s it. That’s how all of this got rolling: Facebook is free to use because it has advertisements and it gets money every time we click on those ads. Same with Google. If they show us ads that are interesting and relevant, we’re more likely to click on them, which makes the companies more money. That’s not evil, it’s not the seeds of a new world order, it’s not even Big Brother ─ it’s Capitalism, strong and simple.

Of course this technology has the potential to harm us, but that’s true of everything. Just be smart. Be safe. Don’t put every single detail of your personal life online. Even if we didn’t have algorithms dissecting every piece of personality we reveal to the world, there are flesh and blood people out there looking for the exact same information for darker purposes. So take a breath, relax, and consider whether you really need to advertise your vacation plans before you leave the country for two weeks.

This is a brilliant and amazing time in human history. Let’s not waste it with naiveté or paranoia.

For anyone interested in more information on facial recognition technology in general, or Deepface in particular, you can read Facebook’s official research paper on the project online here.

Until next time: Tag. You’re it.